The Art of Giving and Receiving Code Reviews (Gracefully)

Monday, June 25, 2018

The research on code reviews is pretty clear: they reduce code defects at an incredible rate, typically around 80% to 90%1. Harder to measure, but clearly valuable, are the learning opportunities of reading others’ code and having others read your code, the enforcement of readable code, and the maintenance of code standards and consistent architectural patterns. But stories of absent or dysfunctional code reviews are prevalent, and this is hardly surprising when you consider the psychological challenge they present. Not talking about these challenges represents a real missed opportunity – not only to optimise the efficacy of the code review process, but also to maintain happy, collaborative working relationships.

Source: xkcd.com

Ownership & Authorship

‘Egoless’ programming is a commendable aspiration, but if the expected outcome of following such1 principles is that programmers will be able to be objective about their work, or able to accept criticism without emotion, that’s not realistic. Consider the finding from behavioural economics that people ascribe more value to things merely because they own them. The so-called ‘endowment effect’ is demonstrated by randomly gifting an item to half the study participants, and then observing how highly participants value the item (either by asking them to rate it, or by inviting them to trade it) – owners will routinely value items more highly than non-owners. It’s not a question of being objective; ownership simply changes value assessments in a predictable way.2

Of course, ownership in the sense of authorship is a different phenomenon, but it seems only right to suppose that authorship has an even more profound effect on value assessment. You most likely identify strongly with your work and the choices you make in your code; coding is after all a form of artisanship and it’s only natural to take pride in your craft.

Worthwhileness vs Conflict Potential

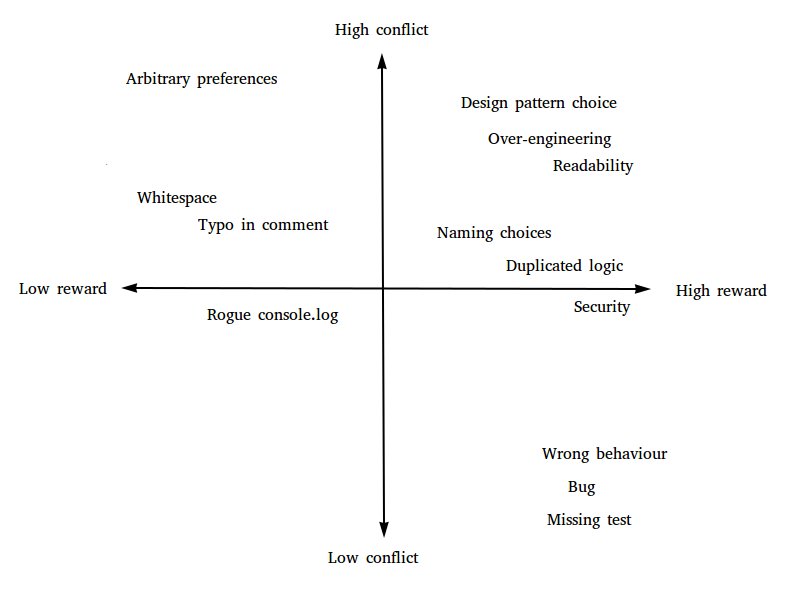

Not all code review comments have the same psychological features and some might be easier to be objective about than others. I think of comments as falling somewhere along two axes: worthwhileness and conflict potential.

In the top left quadrant, comments that we could characterise as pedantry. People have their preferences about whitespace, naming conventions, etc but really you just need to pick some conventions and stick with them. These are not comments on the code per se and they don’t need a code review to pick them up; there are many automated tools that can identify style rule violations and correct them. The perceived pettiness of these comments is where their potential for conflict arises, as well as their contribution to the overall volume of comments, volume itself being a cause for defensiveness. Automate away this type of feedback and keep the code review focused on things only a human can pick up.

In the bottom half of the graph, defects. I place things like bugs and missing tests in the ‘low conflict’ bracket precisely because there is no sense of ownership over these things. No-one made an active choice to introduce a bug, or implement the wrong functionality, so they’re unlikely to feel defensive about fixing it.

The top right quadrant of the scatter graph above speaks to those fairly unquantifiable, but worthwhile things like code readability, DRY factor, design pattern choices etc. This type of comment can’t be automated away, has a high payoff, and is the most direct comment on an author’s choices. Hopefully by minimising other types of issue we can retain some collaborative goodwill for issues in this category. But how do we ensure that discussions arising in this way are productive and don’t deteriorate into dysfunction?

Conflict & Collaboration

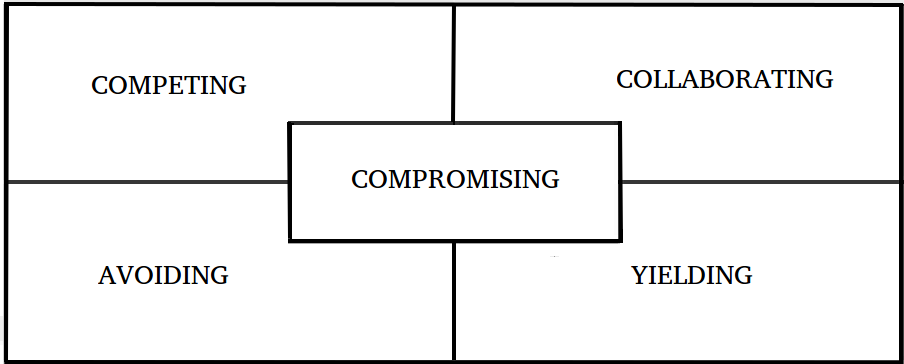

Conflict resolution theory teaches that there are 5 archetypal ways to respond to conflict: Avoiding, Yielding, Compromising, Competing and Collaborating.3

If someone is reluctant to submit code for review at all, or treats code review as a formality, just signing off others’ code without comment, you may be witnessing an Avoidant or Yielding approach to conflict. On the other hand, code reviews can be a forum for Competing, manifesting in aggressive or insensitive language, an unnecessarily large volume of comments left while reviewing, or a total unwillingness to implement suggested changes as an author. And of course having one member of the team who is competitive might induce others to become yielding or avoidant, because the conflict becomes more unpleasant. Collaborating, with active participation from all involved, is the optimal approach that we’re all keen to incubate.

Understanding one’s own tendencies can go a long way to addressing them, as well as understanding the tendencies of our coworkers. A yielding or avoidant person can be induced to collaborate by raising their confidence. A competitive person needs to have their defences lowered. But really everyone can benefit from these two golden rules of raising confidence and lowering defences.

As an organisation we can…

- Encourage code pairing, through which authorship and ownership are shared.

- Discuss tasks prior to implementation. If introducing a new feature, or refactoring using a new design pattern, discuss this first as a team.

- Avoid 1 person per project structures. Keep developers moving between codebases; it may be tempting to let the person most familiar with a codebase or a particular feature always pick up related tasks, but this is a false economy; code quality will be improved by having a diversity of inputs.

- Emphasise a sense of teamwork at all stages; bugs are never one person’s fault, successes are the team’s successes.

- Make sure everyone reviews code, and everyone has their code reviewed. Code reviews should never be about singling people out.

As a reviewer we can…

- Never say “you”. Comments can always be rephrased with “we” as the subject, or in a neutral way with the code as the subject. For example,

- a) “You need a unit test for this” -> “We should unit test this”

- b) “Why did you do it this way?” -> “What are the advantages of this approach?”

- Phrase things as questions; as well as sounding friendlier, you may actually be wrong in your first impression. So “We already have a function x that does this” -> “How does this functionality differ from function x?”/ “Can we re-use x here?”

- Should go without saying, but never ever insult someone, or use insulting language about their code. Straight up negative comments like “This code is a mess” serve no purpose other than to get the author’s hackles up. If code is really so sub-par that a few minor improvements won’t make it passable, this is an issue that can’t be settled by code review and should be taken offline. The author probably needs more support at an earlier stage, through training, code pairing, mentoring etc.

- A large volume of comments, even if each is sensitively phrased, can feel like an attack on the author. One idea by Michael Lynch is to try to raise code quality by no more than one or two letter grades:

“I privately think of the code in terms of letter grades, from A to F. When I receive a changelist that starts at a D, I try to help the author bring it to a C or a B-. Not perfect, but good enough.”3

Prioritise essential comments, and accept passable code even if it isn’t perfect. Raising code quality is a process that happens over time through training and mentoring; it can’t happen through code review alone.

-

And finally, make positive as well as constructively critical comments on a code review. It’s generally very easy to find something positive to say, for example:

“This is a neat library you found”

“I didn’t know this function existed, thanks for bringing it to my attention”

“This is really easy to follow, thanks!”

“Test coverage looks really comprehensive”At the very least, you can always say thank you to the author for their hard work!

As an author we can…

- Practice the ‘as if’ technique, an exercise in imagination and self-control: Imagine how you would feel and act if things were different. Some examples:

- How would you respond if you were in your best mood?

- If you were not the author of the code?

- If you knew that the reviewer was having a bad day and it wasn’t personal?

- If you were an even tempered friend or co-worker you admire?

- If the review comments were phrased differently?

- Say thank you. This is both an implementation of the ‘as if’ technique (the act of saying ‘thank you’ can trick your brain into feeling more genuinely grateful), and a way to disarm the reviewer -the best way to nudge another person towards co-operation is to be co-operative yourself.

- Annotate your pull request first. That way you can explain your choices, and invite conversation on the points you think merit discussion.

Summary

A code review is a dialogue, but it is also a critique of a person’s work, and both reviewer and author should bear in mind the psychology of ownership and criticism. Some of the most valuable things that come up during code review are things which different developers can reasonably disagree about, and discussing such things is exactly how we learn and innovate.

A couple more blogs on this subject that I enjoyed:

1. https://mtlynch.io/human-code-reviews-1/

2. https://css-tricks.com/code-review-etiquette/

Does any of this ring true for you in your experience? Have you tried any of the approaches I mention here? I’d love to hear more about how other teams navigate the code review process!

1. https://blog.codinghorror.com/code-reviews-just-do-it/

2. https://en.wikipedia.org/wiki/Endowment_effect

3. Based on the Dual Concern model

4. Having said that, we still don’t want a deluge of these comments to overwhelm the author. Some suggestions for optimising the conversation around defects:

- Keep code reviews small. This means under 400 lines of code. The code changes should be focused on one feature only. (This is a future blog post in itself – the art of creating a 400 line pull request!)

- Ensure you have complete test coverage before submitting your code for review. Unit tests catch bugs better than reviewers!

- Authors should review their code first. Annotating a pull request can catch a lot of defects before even handing off to reviewer.

5. https://mtlynch.io/human-code-reviews-2/#aim-to-bring-the-code-up-a-letter-grade-or-two

Thanks for this nice article, I couldn’t agree more with what you are saying. For years I’ve seen the code review (I’m the CTO) as a “Full Metal Jacket boot camp” exercise so I avoided it, but as I am reading more blog post about it, I think we missed something. What medium do you think is the best for code review ? synchronous/direct or asynchronous ? What percentage of time pased on review is good ? 5-10% ?

Glad you found it helpful, and thanks for the comment. I personally would recommend “indirect” code reviews (i.e. written comments rather than in-person) for a few reasons.

1. You need to give a code review enough time (IBM recommends at least an hour for a 400 line changeset: https://www.ibm.com/developerworks/rational/library/11-proven-practices-for-peer-review/index.html). Written reviews ensure the reviewer can take their time and go at their own pace.

2. In person the reviewer may feel pressure to act like they understand the code even when they don’t. This is related to the first point, because they may also feel pressure to go at the author’s pace, which will be too quick.

3. During a written review, the reviewer can edit their comments at the end to make sure they’ve communicated effectively and sensitively.

That IBM article is worth reading in full for details about optimal changeset size, time spent etc. Overall I’d say around 10-15% of a developer’s time spent code reviewing sounds about right (and not more than an hour at a time.)

I love the summarization you have under “Conflict and Collaboration”. I have a similar picture from my understanding. Thanks for sharing.

Very well written post! Liked the reward/ conflict quadrants as well as the examples to make code review a better experience.

Still one of my favourite reads on software development ever!

Do you see the same applying in the wider interactions amongst developers, e.g. commenting answers on forums etc.? There is a fine line between sharing complementary knowledge (‘You could also solve this using…’) and showing off knowledge. Before posting something, I’d be tempted to check where in this quadrant my comment would fall 😉